Bachelor/Master Theses, Semester Projects, and DAS DS Capstone Projects

If you are interested in one of the following topics, please send an email to Prof. Bölcskei and include your complete transcripts. Please note that we can not respond to requests that are sent directly to PhD students in the group or do not contain your transcripts.

These projects serve to illustrate the general nature of projects we offer. You are most welcome to inquire directly with Prof. Bölcskei about tailored research projects. Likewise, please contact Prof. Bölcskei in case you are interested in a bachelor thesis project.

Also, we have a list of ongoing and finished theses on our website.

List of Bachelor Projects (BA)

List of Semester Projects (SP)

- Acoustic sensing and trajectory estimation of objects flying at supersonic speed (with industry)

- Building probability models from scattering networks

- A new framework for learning governing differential equations

- Why transformers cannot learn maths?

List of Master Projects (MA)

- Dynamical systems on big metric spaces

- Geometry of extraction and simplification of Boolean formulae

- Learning cellular automaton transition rules with transformers

- Explaining the learnability of certain Boolean functions

- The "logic" behind transformers

- A new framework for learning governing differential equations

- Generating singular distributions through neural networks

- Why transformers cannot learn maths?

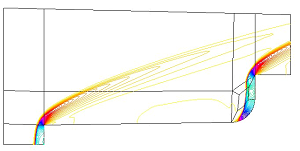

Acoustic sensing and trajectory estimation of objects flying at supersonic speed (with industry) (SP)

The goal of this project is to investigate new techniques for the task at hand, such as linearization of non-linear systems of equations, least squares fitting, and neural network driven machine learning. Existing hardware and algorithms provide a starting point for the project, which will be carried out in collaboration with an industry partner called SIUS (located in Effretikon, Zurich). SIUS offers close supervision and the possibility to use hardware and a test laboratory.

About the industry partner: SIUS is the world’s leading manufacturer of electronic scoring systems in shooting sports. The company is specialized in producing high speed and high precision measurement equipment capable of measuring projectile position and trajectory and has been equipping the most important international competitions including the Olympic Games for decades.

Type of project: 20% literature research, 20% theory, 50% implementation/programming, 10% experiments

Prerequisites: Solid mathematical background, knowledge of SciPy, Matlab or a similar toolset, ideally knowledge on (deep) neural networks

Supervisor: Michael Lerjen, Steven Müllener

Professor:

Helmut Bölcskei

References:

[1] SIUS Homepage [Link to Document]

Dynamical systems on big metric spaces (BA/MA)

Recent work [3] established that, for measure-preserving dynamical systems on compact metric spaces, the metric mean dimension agrees with the rate–distortion dimension. This equivalence is conceptually and technically significant: upper bounds on metric mean dimension, which are typically difficult to derive directly, can instead be obtained via rate–distortion arguments.

The objective of the present project is to extend the approach of [3] to dynamical systems defined on large metric spaces—such as generalized Hilbert cubes or Wasserstein spaces—that admit a power-exponential scaling structure [4].

Type of project: 100% theory

Prerequisites: Strong mathematical background, measure theory

Supervisor: Erwin Riegler

Professor:

Helmut Bölcskei

References:

[1] T. Berger, "Rate distortion theory: A mathematical basis for data compression," Englewood Cliffs, N.J., Prentice-Hall, 1971.

[2] E. Riegler, G. Koliander, and H. Bölcskei, "Lossy compression of general random variables," Information and Inference: A Journal of the IMA, vol. 12, no. 3, pp. 1759–1829, 2022. [Link to Document]

[3] E. Lindenstrauss and M. Tsukamoto, "From rate distortion theory to metric mean dimension: Variational principle," IEEE Transactions on Information Theory, vol. 64, no. 5, pp. 3590–3609, 2018. [Link to Document]

[4] B. Kloeckner, "A generalization of Hausdorff dimension applied to Hilbert cubes and Wasserstein spaces," Journal of Topology and Analysis, vol. 4, no. 2, pp. 203–235, 2012. [Link to Document]

Building probability models from scattering networks (SP)

The goal of this project is to study how well the probability measure obtained via the scattering network method [3] approximates the true probability measure. Our focus is on understanding the impact of the network design, particularly the choice of the filters, on the approximation error.

Type of project: 100% theory or 60% theory and 40% programming

Prerequisites: Strong mathematical background

Supervisor: Konstantin Häberle

Professor:

Helmut Bölcskei

References:

[1]

S. Mallat, “Group invariant scattering,” Communications on Pure and Applied

Mathematics, vol. 65, no. 10, pp. 1331–1398, 2012.

[Link to Document]

[2] T. Wiatowski and H. Bölcskei, “A mathematical theory of deep convolutional neural networks for feature extraction,” IEEE Transactions on Information Theory, vol. 64, no. 3, pp. 1845–1866, 2018. [Link to Document]

[3] J. Bruna and S. Mallat, “Multiscale sparse microcanonical models,” Mathematical Statistics and Learning, vol. 1, no. 3, pp. 257–315, 2018. [Link to Document]

Geometry of extraction and simplification of Boolean formulae (MA)

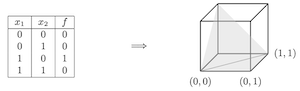

A Boolean function 𝑓: {0, 1}n → {0, 1} can be represented in various formats, a truth table of size 2n where each row stores an input-output pair, a Karnaugh map [1], and a circuit consisting of Boolean logic gates [2, Sec. 12.3]. Boolean formulae can be manipulated through the application of the Boolean axioms [3]. Manipulating Karnaugh maps and Boolean circuits is typically more challenging. Extracting Boolean formulae from representations of Boolean functions in other formats is hence of fundamental importance.

The goal of this project is to develop a geometric approach to the extraction and manipulation of Boolean formulae. The idea you will build on is based on the insight that Boolean truth tables can be interpolated linearly through simplex subdivision of the hypercube [0, 1]n to continuous piecewise linear functions [4, 5]. You will apply algorithms for the extraction of Boolean formulae from these continuous piecewise linear functions [6, 7, 8]. Further, you will try to develop a methodology for the symbolic manipulation of Boolean formulae by working directly with their corresponding continuous piecewise linear functions. You will also study the literature on simplex interpolation [4, 5, 9, 10].

Type of project: 100% theory,

Prerequisites: Knowledge in Boolean logic

Supervisor: Yani Zhang

Professor:

Helmut Bölcskei

References:

[1]

M. Karnaugh, “The map method for synthesis of combinational logic circuits,” Transactions of the American Institute of Electrical Engineers, Part I: Communication and Electronics, vol. 72, no. 5, pp. 593–599, 1953.

[Link to Document]

[2] K. H. Rosen, Discrete Mathematics and Its Applications, New York: McGraw-Hill, 2012. [Link to Document]

[3] C. E. Shannon, “The synthesis of two-terminal switching circuits,” The Bell System Technical Journal, vol. 28, no. 1, pp. 59–98, 1949. [Link to Document]

[4] R. Rovatti, M. Borgatti, and R. Guerrieri, “A geometric approach to maximum-speed n-dimensional continuous linear interpolation in rectangular grids,” IEEE Transactions on Computers, vol. 47, no. 8, pp. 894–899, 1998. [Link to Document]

[5] A. Weiser and S. E. Zarantonello, “A note on piecewise linear and multilinear table interpolation in many dimensions,” Mathematics of Computation, vol. 50, no. 181, pp. 189–196, 1988. [Link to Document]

[6] Y. Zhang and H. Bolcskei, “Extracting formulae in many-valued logic from deep neural networks,” IEEE Transactions on Signal Processing, Mar. 2025, submitted. [Link to Document]

[7] D. Mundici, “A constructive proof of McNaughton’s theorem in infinite-valued logic,” The Journal of Symbolic Logic, vol. 59, no. 2, pp. 596–602, 1994. [Link to Document]

[8] S. Aguzzoli, “Geometrical and Proof Theoretical Issues in Lukasiewicz Propositional Logics,” PhD thesis, University of Siena, Italy, 1998.

[9] J. A. D. Loera, J. Rambau, and F. Santos, Triangulations: Structures for Algorithms and Applications, Springer, 2010.

[10] N. Zhang, K. Canini, S. Silva, and M. Gupta, “Fast linear interpolation,” ACM Journal on Emerging Technologies in Computing Systems (JETC), vol. 17, no. 2, pp. 1–15, 2021.

Learning cellular automaton transition rules with transformers (MA)

A cellular automaton (CA) is a discrete dynamical system consisting of a regular lattice in one or more dimensions with cell values taken from a finite set. The cells change their states at synchronous discrete time steps based on a transition rule [1]. Despite the simplicity of the CA model, it can exhibit complex global behavior. With suitably chosen transition rules, cellular automata can simulate a plethora of dynamical behaviors [2, 3]. The inverse problem of deducing the transition rule from a given global behavior is extremely difficult [4]. In this project, you will investigate the possibility of training transformers [5] to learn CA transition rules.

Type of project: 30% theory, 70% implementation

Prerequisites: Good programming skills, knowledge in machine learning

Supervisor: Yani Zhang

Professor:

Helmut Bölcskei

References:

[1]

J. Kari, “Theory of cellular automata: A survey,” Theoretical computer science, 334(1-3):3–33, 2005.

[Link to Document]

[2] T. Toffoli and N. Margolus, “Cellular automata machines: A new environment for modeling,” MIT press, 1987. [Link to Document]

[3] A. Adamatzky, “Game of life cellular automata,” vol. 1, Springer, 2010. [Link to Document]

[4] N. Ganguly, B. K. Sikdar, A. Deutsch, G. Canright, and P. P. Chaudhuri, “A survey on cellular automata,” 2003. [Link to Document]

[5] A. Vaswani, et al., “Attention is all you need,” Advances in Neural Information Processing Systems 30, 2017. [Link to Document]

Explaining the learnability of certain Boolean functions (MA)

Application of the main theorem in [1] to MSBₙ yields an upper bound on the number of training steps which is polynomial in 1/ε and 2n, and thus exponential in n. In contrast, experiments indicate that MSBₙ can, in fact, be learned to within precision ε in a number of steps polynomial in both 1/ε and n. This sharp discrepancy between theoretical bounds and empirical performance suggests that MSBₙ has a richer internal structure not captured by the staircase property. The aim of the present project is to investigate this structure theoretically, with the goal of explaining the experimental observations.

Type of project: 90% theory, 10% simulation

Prerequisites: Basic knowledge in statistics, Fourier analysis, graph theory, Boolean logic

Supervisor: Valentin Abadie

Professor:

Helmut Bölcskei

References:

[1]

E. Abbe et al. "The staircase property: How hierarchical structure can guide deep learning," Advances in Neural Information Processing Systems 34, 2021.

[Link to Document]

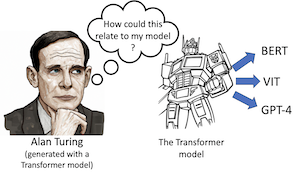

The "logic" behind transformers (MA)

In this project, you will familiarize yourself with literature on links between Turing machines and neural networks. You would then establish direct connections between the transformer architecture and Turing machines, with the goal of understanding better how transformers simulate Turing machines.

Type of project: 100% theory

Prerequisites: Knowledge in neural network theory and theoretical computer science, appetite for theory in general

Supervisor: Valentin Abadie

Professor:

Helmut Bölcskei

References:

[1]

A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, "Attention is all you need," Advances in Neural Information Processing Systems, 2017.

[Link to Document]

[2] S. Bhattamishra, A. Patel, and N. Goyal, "On the computational power of transformers and its implications in sequence modeling," arXiv preprint arXiv:2006.09286, 2020. [Link to Document]

[3] H. T. Siegelmann and E. D. Sontag, "On the computational power of neural nets," Journal of Computer and System Sciences, vol. 50(1), pp. 132–150, 1995. [Link to Document]

A new framework for learning governing differential equations (MA/SP)

In this project, you will study a new framework for unique and stable identification of governing ordinary differential equations from solution data. Specifically, you will design and implement algorithms based on this new framework and test their efficiency. If time permits, you will also extend the theoretical results of this new framework to partial differential equations.

Type of project: 70% programming and 30% theory

Prerequisites: Knowledge in differential equations and machine learning, good programming skills

Supervisor: Yang Pan

Professor:

Helmut Bölcskei

References:

[1]

S. L. Brunton, J. L. Proctor, and J. N. Kutz, “Discovering governing equations from data: Sparse identification of nonlinear dynamical systems,” Proceedings of the National Academy of Sciences, pp. 3932–3937, 2016.

[Link to Document]

[2] M. Holler and E. Morina, “On uniqueness in structured model learning,” arXiv preprint, arXiv:2410.22009, 2024. [Link to Document]

[3] Z. Long, Y. Lu, X. Ma, and B. Dong, "PDE-Net: Learning PDEs from data," Proceedings of the 35th International Conference on Machine Learning, pp. 3208–3216, 2018. [Link to Document]

[4] R. Molinaro, Y. Yang, B. Engquist, and S. Mishra, "Neural inverse operators for solving PDE inverse problems," arXiv preprint, arXiv:2301.11167, 2023. [Link to Document]

[5] S. H. Rudy, S. L. Brunton, J. L. Proctor, and J. N. Kutz, "Data-driven discovery of partial differential equations," Science advances, vol. 3, no. 4, 2017. [Link to Document]

[6] P. Scholl, A. Bacho, H. Boche, and G. Kutyniok, "The uniqueness problem of physical law learning," IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1–5, 2023. [Link to Document]

[7] P. Scholl, A. Bacho, H. Boche, and G. Kutyniok, "Symbolic recovery of differential equations: The identifiability problem," arXiv preprint, arXiv:2210.08342, 2022. [Link to Document]

Generating singular distributions through neural networks (MA)

The goal of this project is to derive results on the generation of singular, e.g., fractal, distributions through a set of neural networks of cardinality depending on the Hausdorff dimension of the probability distribution.

Type of project: 100% theory

Prerequisites: Strong mathematical background, measure theory

Supervisor: Erwin Riegler

Professor:

Helmut Bölcskei

References:

[1] D. Perekrestenko, L. Eberhard, and H. Bölcskei, "High-dimensional distribution generation through deep neural networks," Partial Differential Equations and Applications, Springer, vol. 2, no. 64, pp. 1–44, Sep. 2021.

[Link to Document]

[2] Y. Yang, L. Zhen, and Y. Wang, "On the capacity of deep generative networks for approximating distributions," Neural Networks, vol. 145, no. C, pp. 144–154, Jan. 2022. [Link to Document]

[3] K. Falconer, "Fractal geometry: Mathematical foundations and applications," Wiley, 2nd ed., 2003. [Link to Document]

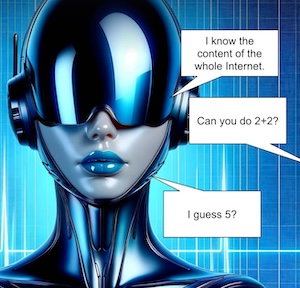

Why transformers cannot learn maths? (MA/SP)

In this project, you will study which part of the learning process hinders the realization of simple mathematical operations. Specifically, you will implement the transformer architecture simulating Turing machines in [2], and you will try to reach this architecture through a learning algorithm.

Type of project: 100% simulation

Prerequisites: Knowledge in neural network theory and good programming skills

Supervisor: Valentin Abadie

Professor:

Helmut Bölcskei

References:

[1]

A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, "Attention is all you need," Advances in Neural Information Processing Systems, 2017.

[Link to Document]

[2] S. Bhattamishra, A. Patel, and N. Goyal, "On the computational power of transformers and its implications in sequence modeling," arXiv preprint arXiv:2006.09286, 2020. [Link to Document]

[3] R. Nogueira, Z. Jiang, and J. Lin, "Investigating the limitations of transformers with simple arithmetic tasks,” arXiv preprint arXiv:2102.13019, 2021. [Link to Document]

[4] F. Charton, ”Can transformers learn the greatest common divisor?,” arXiv preprint arXiv:2308.15594, 2023. [Link to Document]